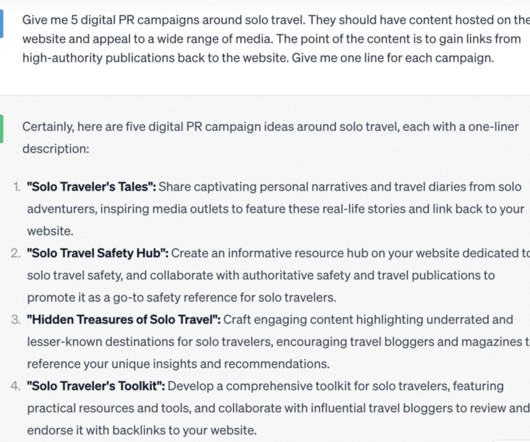

The Promise and Pitfalls of Chaining Large Language Models for Email

Tom Tunguz

OCTOBER 6, 2023

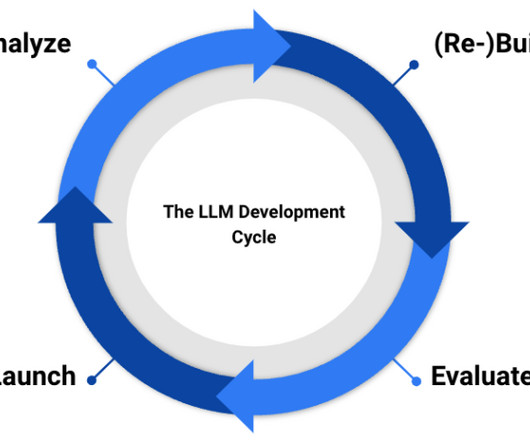

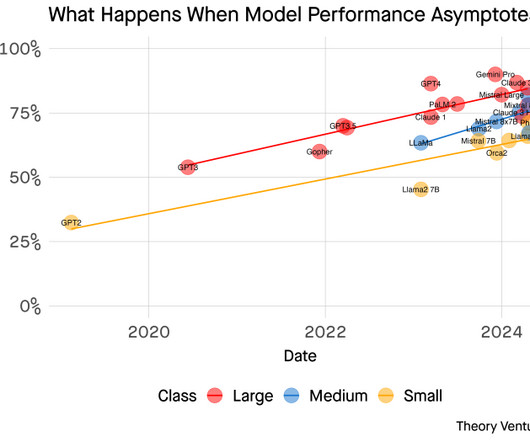

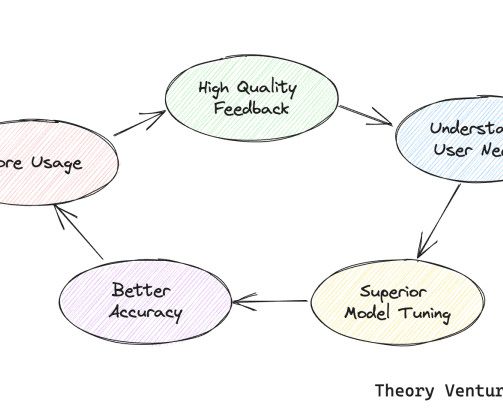

Over the last few weeks I’ve been experimenting with chaining together large language models. I dictate emails & blog posts often, so the chain starts with a transcription step whose output becomes the prompt for an LLM. In machine learning systems, achieving an 80% solution is pretty rapid. But errors compound down the chain: bad data from the transcription -> inaccurate prompt to the LLM -> incorrect output.
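
A quick way to see why a chain degrades: if every stage is an "80% solution," the pipeline as a whole is worse than any single stage. The sketch below is purely illustrative. It assumes each stage succeeds independently with a fixed probability and that any single failure corrupts the final email. The 0.8 figures and the stage names are hypothetical, not measurements.

```python
from math import prod


def chain_accuracy(stage_accuracies: list[float]) -> float:
    """End-to-end accuracy of a chained pipeline.

    Assumes (hypothetically) that stages fail independently and that an
    error in any stage propagates to an incorrect final output.
    """
    return prod(stage_accuracies)


# Hypothetical two-stage email pipeline: dictation transcription -> LLM draft.
stages = {"transcription": 0.8, "llm_draft": 0.8}
end_to_end = chain_accuracy(list(stages.values()))
print(f"end-to-end accuracy: {end_to_end:.2f}")  # prints: end-to-end accuracy: 0.64

# Each additional 80% stage multiplies the accuracy by 0.8 again.
for n_stages in range(1, 5):
    print(n_stages, f"{chain_accuracy([0.8] * n_stages):.2f}")
```

Under these assumptions, two 80% stages yield roughly 64% correct emails, and each added link lowers the ceiling further. Real stages are rarely independent (a clean transcript makes the LLM's job easier), so this is a rough intuition rather than a model of any specific system.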