Fueled by the need to stay competitive, businesses are rushing to implement AI. But this hype often prioritizes speed over substance, leading to solutions that don't actually add value.

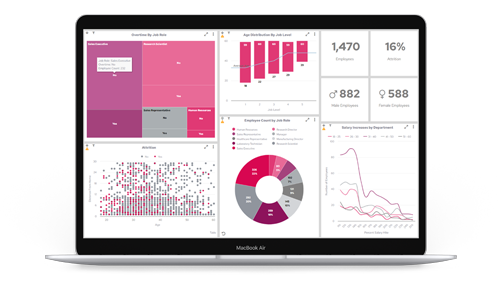

For example, if you’re looking for enhanced Business Intelligence, you need to combine AI with human intuition. This gives you the best understanding of what’s really happening, so you can get the best possible outcome rather than one that is merely statistical.

In a recent webinar with industry experts, we uncovered four key steps to successfully leveraging AI—which we'll dive into in this blog post.


AI implementation: why now?

Whether it’s chatbots, image detection, or predictive modeling like Causal AI, the media is rife with stories about AI applications. AI has moved beyond the "Peak of Inflated Expectations" in the Gartner Hype Cycle and is now reshaping operations, decision-making, and customer interactions across industries.

But this pressure to adopt is causing issues.

As Zandra says: "However, these projects are driving limited value. They're not necessarily creating great experiences internally or externally, and that's not creating confidence or trust in AI. So there's a real friction between being first and doing something quickly, versus driving value."

So, what should businesses do to operationalize AI?

4 steps to AI implementation

Step 1: Define business goals and objectives

As with most projects, the first step is defining clear business goals and objectives. What do you want AI to help you achieve? This is important because organizations can get dazzled by AI's capabilities and adopt it without a thoughtful vision for what they want to achieve. This approach often leads to AI projects that are technically impressive but fail to provide real business value.

So think about your use cases, the objectives, who would be using it, and how they need to use it.

For example: If we’re looking for pointers to future trends, patterns, or forecasts, then we're probably looking at branches of machine learning. Or, if we’re looking at “if we did this, what might happen”, then that's probably simulation.

Otherwise, it's just AI for AI's sake rather than a means to achieve meaningful outcomes. Anchoring AI to strategic business priorities from the start is vital to operationalizing it in an impactful way.

Pro tip. Hold user groups and forums to find out what users would like to see change, what they’re trying to achieve, and how AI needs to work for their product. Also ask where it shouldn't be used (more on this later).

But the objectives can’t just be agreed on by the front line.

As Katherine says: "If you don't have that kind of level of awareness and buy-in at the board level, you're losing strategic competitive advantage in the market. And it also really helps the company to be able to develop their risk appetites."

Pro tip. Have the right people around the table at the very start so you can discuss which problems you should be trying to solve. For example, a CTO can present the use case for why AI is so important. This can help identify the best use cases for AI, which we’ll cover next.

Step 2: Identify AI-ready use cases

Popular AI use cases include predictive maintenance, fraud detection, personalized marketing, and customer service chatbots. Then, once you’ve determined your need, the most important factor is assessing risk.

Why? Because the risk implications of AI significantly impact the potential use cases.

For instance, using AI for medical diagnoses is obviously much higher risk than using it for workplace task automation. That’s why organizations need to carefully consider the potential impact and public trust implications when deploying high-risk AI applications.

It’s fair to say trust is fragile when it comes to AI. Despite its potential to overcome human bias, the concept of it replacing humans is daunting. Plus, there’s the “black box” issue. If the technology seems unreliable, trust erodes quickly. In fact, according to Forbes Advisor, 42% of Brits are concerned with a dependence on AI and loss of human skills.

Pro tip. That’s why having human oversight is critical. Your people can evaluate AI recommendations to help prevent unintended consequences and maintain trust.
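To make the oversight idea concrete, here is a minimal sketch of one way to route AI recommendations to a person. Everything in it is an assumption for illustration: the `Recommendation` fields, the `route` function, and the 0.9 confidence threshold are hypothetical and would need tuning for a real use case.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    case_id: str
    suggestion: str
    confidence: float  # model's own score between 0 and 1 (assumed available)
    high_stakes: bool  # set by the business, not by the model


def route(rec: Recommendation, min_confidence: float = 0.9) -> str:
    """Decide whether a recommendation can be applied automatically.

    High-stakes cases always go to a person; everything else goes to a
    person only when the model is not confident enough.
    """
    if rec.high_stakes or rec.confidence < min_confidence:
        return "human_review"
    return "auto"


if __name__ == "__main__":
    examples = [
        Recommendation("c1", "approve refund", 0.97, high_stakes=False),
        Recommendation("c2", "approve refund", 0.62, high_stakes=False),
        Recommendation("c3", "flag scan for follow-up", 0.99, high_stakes=True),
    ]
    for rec in examples:
        print(rec.case_id, route(rec))
```

The design choice worth noting is that the high-stakes flag overrides confidence entirely, so a confident model never bypasses review where the risk is greatest.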

Ultimately, the user experience of AI solutions also needs to fit the specific context.

For example: A nurse may need a simple color-coded app for quick decisions, while a Formula 1 engineer needs complex real-time analysis from AI. The form of the AI deployment must match the intended use case and user needs.

Overall, organizations must account for the risk level, build in human oversight for high-stakes AI, and tailor the user experience appropriately. Carefully considering these factors allows you to leverage AI's capabilities, all while maintaining ethics, reliability, and public trust.

Along with priority and risk, you’ll want to consider these key points when assessing AI use cases:

- Technological infrastructure: Does the current AI technology effectively tackle the specific challenge at hand?

- ROI potential: What is the expected return on investment? Prioritize projects that are expected to drive the greatest benefits.

- Ethical considerations: Make sure the use case aligns with established ethical principles and does not compromise customer trust or go against privacy standards.

And data availability…
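One hedged way to compare candidate use cases against these criteria is a simple weighted score. The sketch below is illustrative only: the criteria names, the weights, the 1 to 5 rating scale, and the way risk discounts the score are all assumptions, not a method from the webinar.

```python
# Hypothetical weights for each criterion; they sum to 1.0.
DEFAULT_WEIGHTS = {
    "priority": 0.25,
    "roi": 0.25,
    "tech_fit": 0.20,
    "data_availability": 0.20,
    "ethics": 0.10,
}


def score_use_case(ratings, risk, weights=DEFAULT_WEIGHTS):
    """Combine 1-5 ratings into one score, discounted by risk.

    ratings: dict of criterion name -> rating from 1 (poor) to 5 (strong).
    risk: 1 (low) to 5 (high). Risk 1 leaves the score unchanged;
    each extra point of risk removes a fifth of the benefit.
    """
    benefit = sum(weights[name] * ratings[name] for name in weights)
    return benefit * (1 - (risk - 1) / 5)


if __name__ == "__main__":
    chatbot = {"priority": 4, "roi": 4, "tech_fit": 5, "data_availability": 4, "ethics": 4}
    diagnosis = {"priority": 5, "roi": 5, "tech_fit": 3, "data_availability": 2, "ethics": 3}
    print("chatbot:", round(score_use_case(chatbot, risk=2), 2))
    print("diagnosis:", round(score_use_case(diagnosis, risk=5), 2))
```

A score like this is a conversation starter for the people around the table, not a decision in itself; the ratings still come from human judgment.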

Step 3: Consider data

Once you’ve decided on your use case, your biggest hurdle then is making sure the data is ready to power the AI solutions effectively.

Data quality is crucial. It must be accurate, complete, consistent, and unbiased.

Some key questions to consider include:

- Is our data in proper shape to drive the value we want from AI? For example, is it accurately labeled, structured, and comprehensive enough to effectively train AI models?

- How can we verify the data's quality and lack of bias? For instance, is there a fair distribution of data? This is key because inherent biases could skew the AI’s outputs.
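The questions above can be turned into a quick first-pass check. This is a minimal sketch under stated assumptions: the records are plain dictionaries, the field names (`customer_id`, `region`, `churned`) are made up, and the function `data_quality_report` is hypothetical. It only covers completeness, exact duplicates, and label balance, which is a starting point rather than a full bias audit.

```python
from collections import Counter


def data_quality_report(rows, label_key, required_keys):
    """Summarize completeness, duplication, and label balance for dict records."""
    total = len(rows)

    # Completeness: share of rows where every required field is filled in.
    complete = sum(
        all(row.get(key) not in (None, "") for key in required_keys)
        for row in rows
    )

    # Consistency proxy: count rows that exactly repeat an earlier row.
    seen = Counter(tuple(sorted(row.items())) for row in rows)
    duplicates = sum(count - 1 for count in seen.values())

    # Balance: share of each label among labelled rows.
    labels = Counter(
        row[label_key] for row in rows if row.get(label_key) not in (None, "")
    )
    labelled = sum(labels.values())
    label_share = {label: count / labelled for label, count in labels.items()}

    return {
        "rows": total,
        "complete_share": complete / total if total else 0.0,
        "duplicate_rows": duplicates,
        "label_share": label_share,
    }


if __name__ == "__main__":
    records = [
        {"customer_id": 1, "region": "north", "churned": "yes"},
        {"customer_id": 2, "region": "south", "churned": "no"},
        {"customer_id": 3, "region": "", "churned": "no"},
        {"customer_id": 2, "region": "south", "churned": "no"},
    ]
    print(data_quality_report(records, "churned", ["customer_id", "region", "churned"]))
```

A heavily lopsided `label_share` or a low `complete_share` is a prompt to investigate before training anything, not proof of bias on its own.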

Fundamentally, if the data isn’t good enough, the outputs of AI won’t be valuable, and could even be damaging because they create false insights. And that’s where proof of concept comes in.

Pro tip. As with any data project, data security is essential. Follow data privacy laws and regulations (more on this later), and back them with strong cybersecurity practices.

Step 4: Run a proof of concept

Let’s be frank: the first models that anyone creates might not be very predictive. In fact, you might even say they're going to be rubbish. But they'll tell you something. And it's the feedback loop and the speed of that feedback loop that becomes so important. Because that's how we create much, much better models.

You just need to start somewhere. The issue is, sometimes businesses feel like they need to solve the entire problem from the get-go.

Panintelligence’s Ken Miller says it best: "My experience has taught me this over the years: You don't know which characteristics you should be collecting and which ones you should pull down on to build a more effective model, until you get started."

You need to test pilot processes to get a feel for what’s needed.

Simple proof of concept example: Amazon

When Amazon was much younger, you could place an order and, within an hour, they'd turn up on your doorstep with your shopping.

This was powered by someone sitting in a warehouse with a laptop and a queue of people in bike gear behind them. They would print out the order, the person on the bike would wheel over, someone would collect the items and put them in the backpack, and out the rider would go.

This not only showed them whether people wanted to use the service and whether it was effective, but also helped them understand how to build the engine and the elements needed to scale it. These were key elements they probably wouldn’t have thought they needed before they started this venture.

Proofs of concept are key to driving ROI because, let’s be honest, a failed implementation has cost implications.

When drawing up your proof of concept, you can ask more direct questions about your users, like:

- What's their experience like?

- Do they understand it?

- Are they trusting it? If not, why not?

- What additional information do we need?

As Zandra says, this helps to shape the roadmap for implementation: "So that observation of ‘what people do with things in practice’ is super important to driving the value of it."

Now, we’ve covered the key steps, but there’s something else we need to cover: regulation.

Preparing for AI regulations

With the popularity of AI and the worries around reliability and ethics, regulators across many industries are questioning how it is being used.

So, how will AI change with regulation? How will AI be regulated? And what should we keep an eye on?

Katherine says it best: "It’s very much going to be down to individual regulators to regulate this technology, which I guess is a good thing… But I think over time, it's worth recognizing that for the slightly higher risk AI, we'll see greater scrutiny, so greater levels of auditing, and also greater levels of regulation as well.

Hopefully, though, with the approach that the government's taken, what I would probably classify as quite mundane AI technology operating at a very low risk level, I’d think you should be able to continue unobstructed, which is a brilliant thing. So it's an area to definitely keep an eye on but we'll probably see movement on this sort of at the latter end of this year."

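While regulating AI sounds a little vague at present, there is one key thing to know: the steps in this blog help prepare organizations for regulation. Defining objectives, assessing risk, checking data quality, and keeping humans in the loop are what make AI explainable and auditable.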

Now, we’ve covered quite a bit of ground in this blog, so let’s round up the key points.

Key takeaways: How to operationalize AI

Successfully leveraging AI starts with identifying the challenge you want to solve so that you then choose the right type of AI. From there, it’s about ensuring you’ve got quality data to power it.

Human oversight is critical to keep AI models transparent, ethical, and aligned to objectives. The "human-in-the-loop" approach ensures AI outputs can be explained, understood, and corrected if needed. This allows you to scrutinize AI to maintain integrity and value.

As AI use cases evolve, maintaining human involvement will guide adoption strategies and meet regulatory/societal expectations around AI. The goal is to enrich processes through explainable, auditable, and inclusive AI that serves society's interests and supports businesses in achieving their potential.